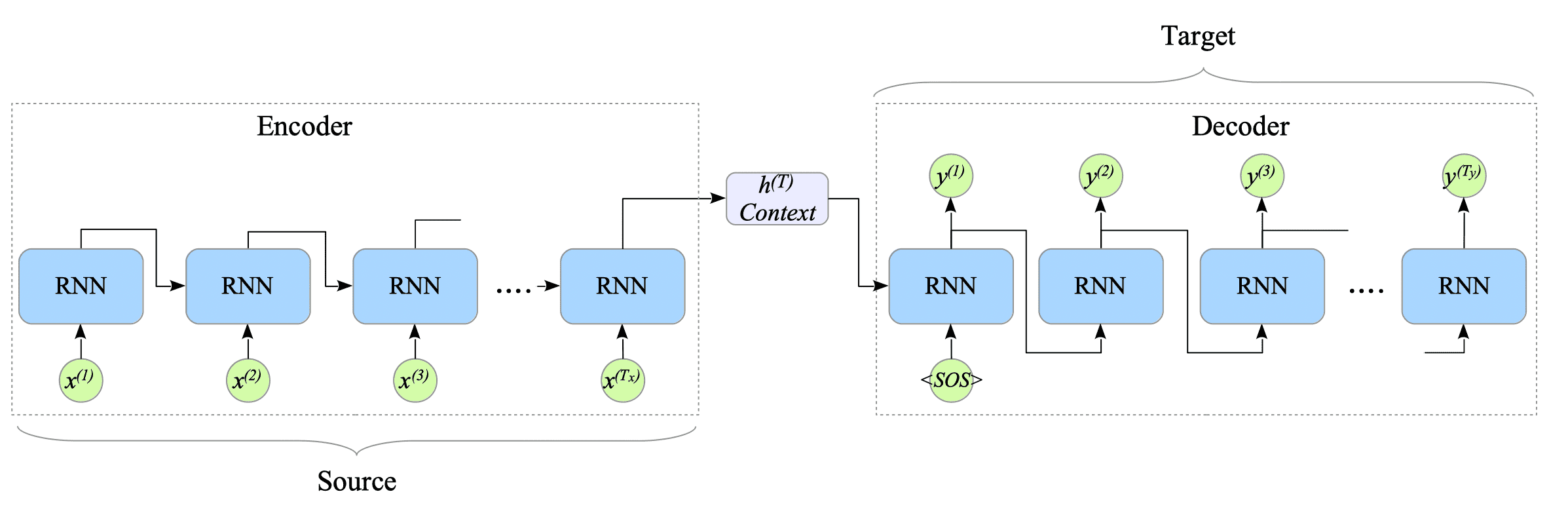

13. Machine Translation with Encoder-Decoder Model

The encoder-decoder model is an architecture for sequence-to-sequence (seq2seq) tasks, where both the input and the output are sequences of data. Introduced in the 2014 paper “Sequence to Sequence Learning with Neural Networks”, it learns a mapping from one sequence to another, as in translating text from one language to another.

The model consists of two parts, an encoder and a decoder, each built using recurrent neural networks (RNNs). The encoder processes the source language input (e.g., Spanish), while the decoder produces the target language output (e.g., English).

Fig.13-1: Encoder-Decoder Model (Training Phase)

The final hidden state of the encoder holds crucial information about the source language input; the sentiment analysis model in the previous chapter used this same final state for classification. Because it is a rich representation of the essence of the input sequence, this state is called the context vector, or simply the context.
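As a rough illustration of how the encoder's final hidden state can serve as the context, here is a minimal pure-Python sketch. It is not this chapter's implementation: the plain tanh RNN cell, the one-hot inputs, the vocabulary and hidden sizes, and all function names (`encode`, `decode_with_target_inputs`, and so on) are assumptions made for the example. The weights are random and untrained, so the predicted token IDs carry no meaning. Feeding the known target tokens to the decoder is also an assumption about the training setup.

```python
import math
import random

def make_matrix(rows, cols, rng):
    """Small random weight matrix, stored as a list of rows."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

def matvec(m, v):
    """Matrix-vector product for list-based matrices."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]

def one_hot(index, size):
    return [1.0 if i == index else 0.0 for i in range(size)]

def rnn_step(W_x, W_h, x, h):
    """One vanilla RNN step: h_new = tanh(W_x x + W_h h)."""
    return [math.tanh(a + b) for a, b in zip(matvec(W_x, x), matvec(W_h, h))]

def encode(token_ids, W_x, W_h, vocab_size, hidden_size):
    """Run the encoder over the source tokens and return its final hidden state."""
    h = [0.0] * hidden_size
    for t in token_ids:
        h = rnn_step(W_x, W_h, one_hot(t, vocab_size), h)
    return h  # the final hidden state plays the role of the context vector

def decode_with_target_inputs(context, target_in_ids, W_x, W_h, W_out, vocab_size):
    """Start the decoder from the context and read the target tokens one by one."""
    h = context  # the decoder's initial state is the encoder's context
    predictions = []
    for t in target_in_ids:
        h = rnn_step(W_x, W_h, one_hot(t, vocab_size), h)
        scores = matvec(W_out, h)  # one score per target vocabulary entry
        predictions.append(max(range(len(scores)), key=scores.__getitem__))
    return predictions

# Hypothetical sizes and token IDs, chosen only for illustration.
SRC_VOCAB, TGT_VOCAB, HIDDEN = 6, 5, 4
rng = random.Random(0)
enc_W_x = make_matrix(HIDDEN, SRC_VOCAB, rng)
enc_W_h = make_matrix(HIDDEN, HIDDEN, rng)
dec_W_x = make_matrix(HIDDEN, TGT_VOCAB, rng)
dec_W_h = make_matrix(HIDDEN, HIDDEN, rng)
dec_W_out = make_matrix(TGT_VOCAB, HIDDEN, rng)

source_ids = [1, 3, 2]     # e.g. a tokenized source sentence
target_in_ids = [0, 2, 4]  # e.g. a start token followed by target tokens

context = encode(source_ids, enc_W_x, enc_W_h, SRC_VOCAB, HIDDEN)
predicted_ids = decode_with_target_inputs(
    context, target_in_ids, dec_W_x, dec_W_h, dec_W_out, TGT_VOCAB
)
print("context vector:", context)
print("predicted target IDs (untrained):", predicted_ids)
```

Note that the only link between the two networks in this sketch is `context`: the decoder sees nothing of the source sentence except what the encoder has packed into that single vector.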

The following sections will delve deeper into the concept and implementation details of machine translation using the encoder-decoder model.

13.1. Training and Translation

13.2. Implementation