9.4. TensorFlow, PyTorch and Keras

This section presents the code implementation for the GRU neural networks using TensorFlow, PyTorch and Keras frameworks.

9.4.1. TensorFlow Version

Complete Python code is available at: GRU_tf.py

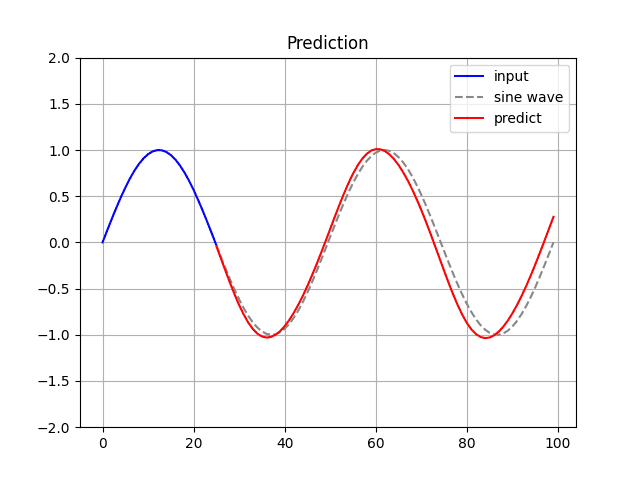

The GRU class is composed of tf.keras.layers.GRU and tf.keras.layers.Dense.

class GRU(tf.keras.Model):

def __init__(self, hidden_dim, output_dim):

super().__init__()

self.gru = tf.keras.layers.GRU(

hidden_dim,

activation="tanh",

recurrent_activation="sigmoid",

kernel_initializer="glorot_normal",

recurrent_initializer="orthogonal",

)

self.dense = tf.keras.layers.Dense(output_dim, activation="linear")

def call(self, x):

x = self.gru(x)

x = self.dense(x)

return x[1] Create dataset.

n_sequence = 25

n_data = 100

n_sample = n_data - n_sequence # number of sample

sin_data = ds.create_wave(n_data, 0.05)

X, Y = ds.dataset(sin_data, n_sequence, False)

X = X.reshape(X.shape[0], X.shape[1], 1)

Y = Y.reshape(Y.shape[0], Y.shape[1])[2] Create model.

input_units = 1

hidden_units = 32

output_units = 1

model = GRU(hidden_units, output_units)

model.build(input_shape=(None, n_sequence, input_units))

model.summary()

lr = 0.001

beta1 = 0.9

beta2 = 0.999

criterion = losses.MeanSquaredError()

optimizer = optimizers.Adam(learning_rate=lr, beta_1=beta1, beta_2=beta2)

train_loss = metrics.Mean()[3] Training.

# ============================

# Training

# ============================

lr = 0.001

beta1 = 0.9

beta2 = 0.999

criterion = losses.MeanSquaredError()

optimizer = optimizers.Adam(learning_rate=lr, beta_1=beta1, beta_2=beta2)

train_loss = metrics.Mean()

n_epochs = 300

for epoch in range(1, n_epochs + 1):

with tf.GradientTape() as tape:

preds = model(X)

loss = criterion(Y, preds)

grads = tape.gradient(loss, model.trainable_variables)

optimizer.apply_gradients(zip(grads, model.trainable_variables))

train_loss(loss)[4] Prediction.

$ python GRU_tf.py

Model: "gru"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

gru_1 (GRU) multiple 3360

dense (Dense) multiple 33

=================================================================

Total params: 3,393

Trainable params: 3,393

Non-trainable params: 0

_________________________________________________________________

epoch: 1/300, loss: 0.651

... snip ...

9.4.2. PyTorch Version

Complete Python code is available at: GRU_pt.py

[1] Create dataset.

n_sequence = 25

n_data = 100

n_sample = n_data - n_sequence # number of sample

sin_data = ds.create_wave(n_data, 0.05)

X, Y = ds.dataset(sin_data, n_sequence, False)

X = X.reshape(X.shape[0], X.shape[1], 1)

Y = Y.reshape(Y.shape[0], Y.shape[1])[2] Create model.

if torch.cuda.is_available():

device = torch.device("cuda")

elif torch.backends.mps.is_available():

device = torch.device("mps")

else:

device = torch.device("cpu")

print(device)

class GRU(nn.Module):

def __init__(self, input_units, hidden_units, output_units):

super().__init__()

self.gru = nn.GRU(input_units, hidden_units, batch_first=True)

self.dense = nn.Linear(hidden_units, output_units)

nn.init.xavier_normal_(self.gru.weight_ih_l0)

nn.init.orthogonal_(self.gru.weight_hh_l0)

def forward(self, x):

h, _ = self.gru(x)

y = self.dense(h[:, -1])

return y

input_units = 1

hidden_units = 32

output_units = 1

model = GRU(input_units, hidden_units, output_units).to(device)

summary(model=GRU(input_units, hidden_units, output_units), input_size=X.shape)

lr = 0.001

beta1 = 0.9

beta2 = 0.999

criterion = nn.MSELoss(reduction="mean")

optimizer = optimizers.Adam(

model.parameters(), lr=lr, betas=(beta1, beta2), amsgrad=True

)[3] Training.

# ============================

# Training

# ============================

n_epochs = 300

history_loss = []

for epoch in range(1, n_epochs + 1):

train_loss = 0.0

x = torch.Tensor(X).to(device)

y = torch.Tensor(Y).to(device)

model.train()

preds = model(x)

loss = criterion(y, preds)

optimizer.zero_grad()

loss.backward()

optimizer.step()

train_loss += loss.item()

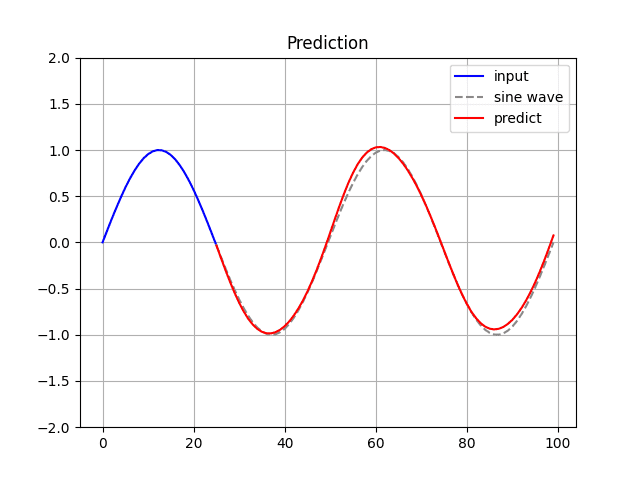

history_loss.append(train_loss)[4] Prediction.

$ python GRU_pt.py

mps

==========================================================================================

Layer (type:depth-idx) Output Shape Param #

==========================================================================================

GRU [75, 1] --

├─GRU: 1-1 [75, 25, 32] 3,360

├─Linear: 1-2 [75, 1] 33

==========================================================================================

Total params: 3,393

Trainable params: 3,393

Non-trainable params: 0

Total mult-adds (Units.MEGABYTES): 6.30

==========================================================================================

Input size (MB): 0.01

Forward/backward pass size (MB): 0.48

Params size (MB): 0.01

Estimated Total Size (MB): 0.50

==========================================================================================

epoch: 10/300, loss: 0.593

... snip ...

9.4.3. Keras Version

Complete Python code is available at: GRU_keras.py

[1] Create model.

# ============================

# Model creation

# ============================

lr = 0.001

hidden_dim = 32

input_dim = 1

output_dim = 1

model = Sequential()

model.add(

GRU(

hidden_dim,

activation="tanh",

recurrent_activation="sigmoid",

use_bias=True,

batch_input_shape=(None, n_sequence, input_dim),

return_sequences=False,

)

)

model.add(Dense(output_dim, activation="linear", use_bias=True))

model.compile(loss="mean_squared_error", optimizer=Adam(lr))[2] Training.

# ============================

# Training

# ============================

n_epochs = 100

batch_size = 5

history_rst = model.fit(

X,

Y,

batch_size=batch_size,

epochs=n_epochs,

validation_split=0.1,

shuffle=True,

verbose=1,

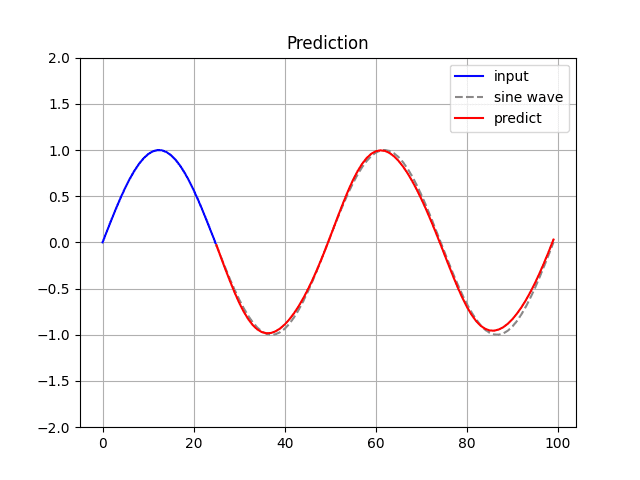

)[3] Prediction.

$ python GRU_keras.py

Model: "sequential"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

gru (GRU) (None, 32) 3360

dense (Dense) (None, 1) 33

=================================================================

Total params: 3,393

Trainable params: 3,393

Non-trainable params: 0

_________________________________________________________________

Epoch 1/100

... snip ...