14. Attention Mechanism

The attention mechanism is a technique that improves the performance of neural networks, most notably on sequence tasks such as machine translation and text classification.

The basic idea of the attention mechanism is simple: it is a dynamic weighting scheme. The weights are computed from the context, so they change from input to input, and they let the model focus on the most relevant parts of the input during processing. In simpler terms, it acts like a dynamic cheat sheet.
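One common way to write this weighting down is sketched below. The symbols are introduced here for illustration and are not defined in the text above: $h_1, \dots, h_T$ are the encoded input positions, $q$ is a context-dependent query vector, and $\operatorname{score}$ is some compatibility function such as a dot product.

$$
\alpha_i = \frac{\exp\big(\operatorname{score}(q, h_i)\big)}{\sum_{j=1}^{T} \exp\big(\operatorname{score}(q, h_j)\big)},
\qquad
c = \sum_{i=1}^{T} \alpha_i \, h_i
$$

The weights $\alpha_i$ are positive and sum to 1. They are recomputed whenever $q$ or the inputs change, which is what makes the weighting dynamic, and $c$ is the resulting context vector.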

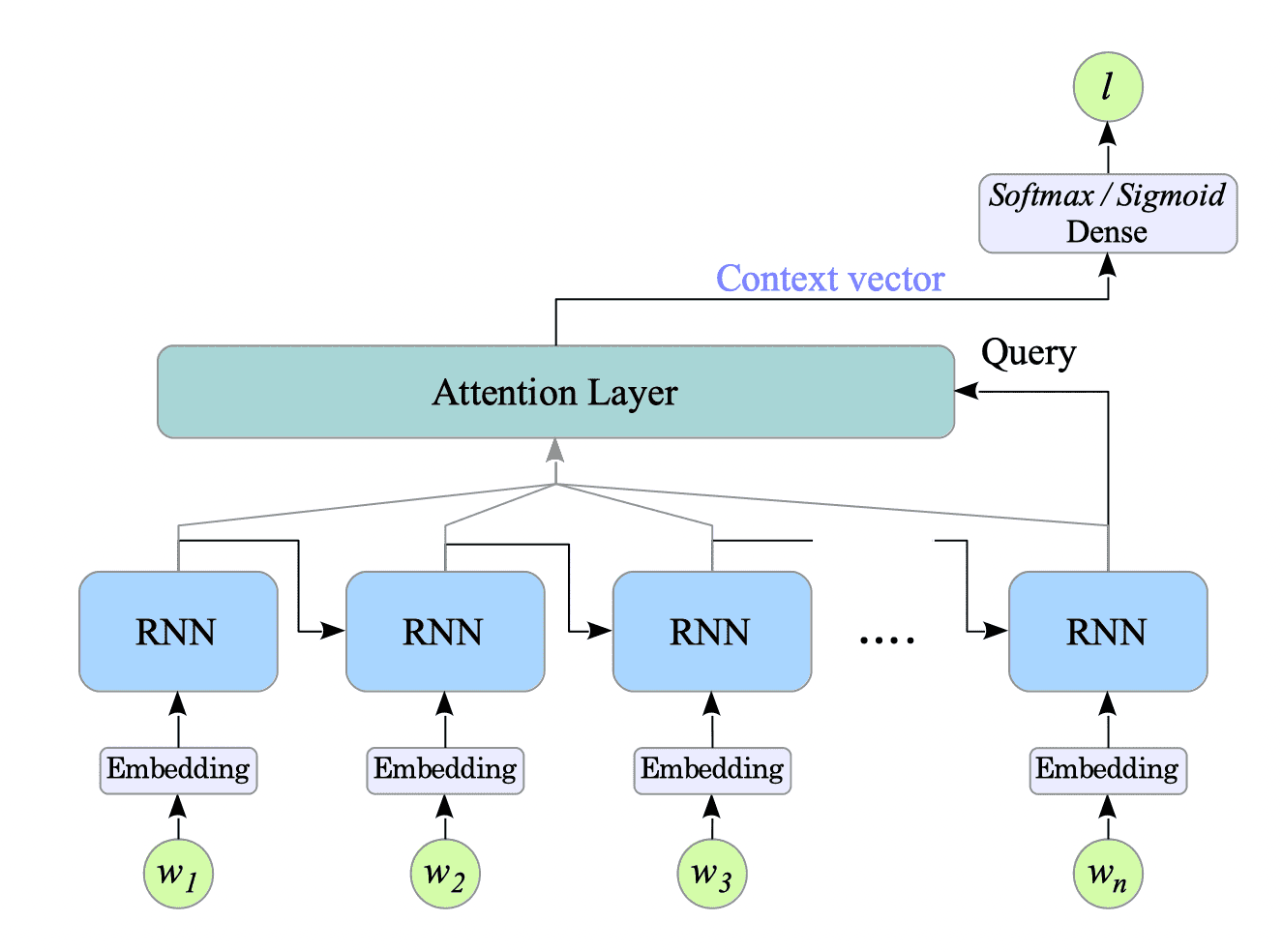

In sentiment analysis, for example, after sufficient training, the model leverages the context vector from the attention layer instead of the final hidden state, because the attention layer can provide a richer context vector that captures the entire context of the input sentence. See Fig.14-1.

Fig.14-1: Basic Concept of Attention Mechanism
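The difference between the final hidden state and an attention context vector can be illustrated with a minimal sketch in plain Python. Everything here is an assumption made for illustration: the hidden states are made-up numbers rather than the output of a trained model, `query` stands in for a learned vector, and dot-product scoring is only one of several possible scoring functions.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def attention_context(hidden_states, query):
    """Return (weights, context) using dot-product scores over all hidden states."""
    scores = [dot(h, query) for h in hidden_states]
    weights = softmax(scores)
    dim = len(hidden_states[0])
    # Context vector: weighted sum of every hidden state, not just the last one.
    context = [sum(w * h[i] for w, h in zip(weights, hidden_states))
               for i in range(dim)]
    return weights, context

# Toy hidden states for a 4-token sentence (made-up values, 3 dimensions each).
hidden_states = [
    [0.1, 0.0, 0.2],  # "the"
    [0.9, 0.8, 0.1],  # "wonderful"
    [0.2, 0.1, 0.0],  # "movie"
    [0.0, 0.1, 0.1],  # "."
]
query = [1.0, 1.0, 0.0]  # hypothetical learned query vector

weights, context = attention_context(hidden_states, query)
final_hidden = hidden_states[-1]  # what a model without attention would use

print("attention weights: ", [round(w, 3) for w in weights])
print("context vector:    ", [round(c, 3) for c in context])
print("final hidden state:", final_hidden)
```

In this toy setup the largest weight falls on the second token, so the context vector is dominated by that token's hidden state, while the final hidden state reflects only the last position.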

This chapter covers the following topics in detail: